Robot that gets bored in Zoom meetings

ECE 4760, Spring 2021, Adams/Land

Introduction

In this lab, you will give a very simple robot a personality.

The nature of this lab is a bit different from that of the other labs. In all of the previous laboratory assignments, you built tools of some variety: in the first lab, a tool for understanding birdsong; in the second, a tool for understanding flocking behavior; in the third, a tool for real-time frequency analysis. The outcome of this lab will not be a tool that could be used for some other purpose. Instead, the objective of this lab is to build something that makes you smile. It is remarkable how much personality a couple of simple behaviors can give a robot. In this lab, you are tasked with creating that personality, subject to a couple of constraints. One of those constraints is that the robot must react to your voice.

Hardware

Micro Servos

The "robot" used for this lab is simply composed of two micro-servos (SG90) arranged in a pan/tilt configuration. A passive piece of hardware is mounted on top of the assembly to give the appearance of eyes. Every behavior that you program will be a sequence of pan/tilt commands to the servos.

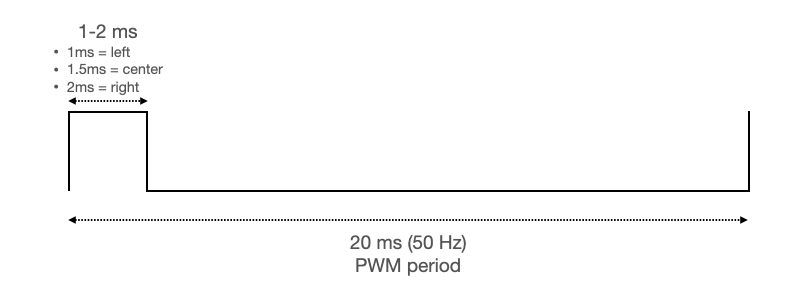

Each of these servos is controlled using pulse-width modulation. The period of the PWM signal is 20 ms (50 Hz), and the pulse width ranges from 1 ms to 2 ms. A pulse width of 1 ms corresponds to the "left" position of the servo (which can rotate 180 degrees), 1.5 ms corresponds to the center position, and 2 ms corresponds to the right position. See the image below.

Note: Because of the physical arrangement of the assembly, the "tilt" servo is constrained in the range of 90-180 degrees. It cannot look "down," in other words.

To generate these PWM signals, you will need to set up two output compare units on a 32-bit timer. See the timer page for information about setting up a 32-bit timer and output compare units. Note that you will also need to configure the output compare units for 32-bit mode, as shown below for OC3:

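```c
// OC3 in PWM mode (fault pin disabled), clocked by the 32-bit Timer2/3 pair; both compare values start at 0
OpenOC3(OC_ON | OC_TIMER_MODE32 | OC_TIMER2_SRC | OC_PWM_FAULT_PIN_DISABLE, 0, 0);
```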

Connections to PIC32

- Serial: the default serial configuration for Protothreads 1.3.2 uses pins:

  ```c
  PPSInput(2, U2RX, RPA1);
  PPSOutput(4, RPB10, U2TX);
  ```

  Be sure to uncomment `#define use_art_serial` in the config file.

- Output compare:

  ```c
  PPSOutput(4, RPA3, OC3); // configure OC3 to RPA3
  PPSOutput(3, RPA2, OC4); // configure OC4 to RPA2
  ```

- ADC:

  ```c
  SetChanADC10(ADC_CH0_NEG_SAMPLEA_NVREF | ADC_CH0_POS_SAMPLEA_AN11); // configure to sample AN11
  ```

- TFT: as described on the Big Board page

- Scope Probe 1: RPA3

- Scope Probe 2: RPA2

Probes movable upon request.

Program Organization

Here is a suggestion for how to organize your program.

- Protothreads maintains the ISR-driven, millisecond-scale timing as part of the supplied system. Use it for all low-precision timing (it can have several milliseconds of jitter).

- Output-Compare ISR uses a timer interrupt to guarantee a 20 ms period for the PWM signals to the servos
  - Sets the duty cycle of the PWM signal to each motor
  - Gathers a sample from the ADC
  - If that sample exceeds a volume threshold, signals the Personality Thread to return the motors to their pre-programmed positions

- Main sets up peripherals and protothreads, then schedules tasks round-robin
  - Opens the 32-bit interrupt timer and configures its interrupt
  - Opens and configures the ADC
  - Opens and configures the output compare modules
  - Sets up protothreads and schedules tasks round-robin

- Python Serial Input Thread
  - Waits for a serial command from the Python user interface
  - Places the system into "Programming" or "Active" mode based on the state of the Python toggle switch
  - In "Programming" mode, sets the positions of the servos from the slider values and stores those positions in global variables

- Personality Thread
  - In the absence of audio input, manipulates the PWM signals to each motor to exhibit some personality
  - When audio input is received, returns the motors to their pre-programmed positions (either instantly or slowly). The motors should remain in that position for a programmed amount of time and then return to their personality-exhibiting mode.

Weekly checkpoints and lab report

Week one checkpoint

By the end of lab section in week one you must have:

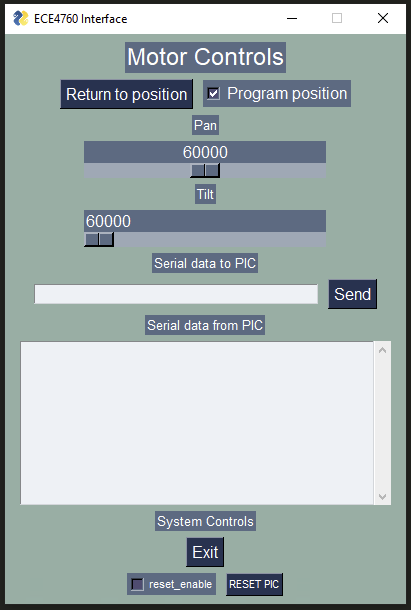

- Programming Mode working. That is, you must be able to control the positions of both servos using sliders in the Python interface. See the first 15 seconds of the video above.

- Finishing a checkpoint does NOT mean you can leave lab early!

Week two assignment

Write a Protothreads C program that meets the following requirements:

- The program will have two modes: Programming and Active.

- At reset, the program starts in Programming mode. In this mode, the positions of the servo motors are controlled by sliders in the Python user interface. The user manipulates the robot into the position to which it will return in Active mode.

- De-selecting a toggle switch in the Python interface places the system into Active mode. In this mode, the robot exhibits some behavior (e.g., looks around) until audio above a threshold volume is detected.

- When audio above a threshold volume is detected, the robot returns to its pre-programmed position for a short amount of time (1-2 seconds), and then resumes looking around.

- Selecting the "Programming Mode" toggle switch in the Python interface places the system back into Programming mode, and allows the user to select a new return position for the robot.

Write a Python program which will:

- Include two sliders (one for pan, one for tilt) that allow the user to set the pre-programmed positions of the servos

- Include a toggle switch that puts the system into programming/active mode

- Optionally include a "Return to position" button that makes the robot return to its pre-programmed position in precisely the same way that it does in response to audio. This is not required, but it is nice for debugging.

At no time during the demo can you reset or reprogram the MCU.

Lab Report

Your written lab report should include the sections mentioned in the policy page, and:

- A heavily commented listing of your code

Opportunities to keep going

- Can you program multiple behaviors and personalities? Can you make your robot appear inattentive, curious, angry, etc.?

- Can you have your robot recognize different voices based on frequency content, and respond differently to each voice?

- Can you have your robot respond differently to different volumes of voice? Perhaps it exhibits one behavior when yelled at, and another behavior when calmly spoken to.

- Can you extract frequency content from music to have your robot dance?